UPDATE: Elasticsearch has made security free. Please check it out.

Authentication in Elasticsearch without using X-Pack or Shield. Possible? Yes. In this post I will show you how to do it using the excellent ReadonlyREST plugin written by sscarduzio. I chose this plugin for its ease of use as well as the way it works. That it is listed on the Elastic website itself as a community-contributed security plugin was a big endorsement in my book. I will leave SSL configuration between the nodes for a later post.

As usual, I will start with WHY, followed by HOW, with a RANT in between.

WHY

Elasticsearch does not come with any security baked into it. Anyone with access to the server URL can pretty much wipe the whole setup clean with curl commands. Unless you buy X-Pack, there is no easy way to create users and grant them permissions that define what they can and cannot do.

A simple use case. Suppose you are using Elasticsearch to store log data, and you send the data to Elasticsearch via a client. Now you want to create a user who can only create indices and push data into those indices. He should not be able to touch any other indices and, heaven forbid, he should not be able to delete any data from any index, including the indices he himself has created. With Elasticsearch as it is, this is not possible. You need some way to configure authentication in Elasticsearch.

RANT

Recently around 27K MongoDB instances got ransomwared. A few Elasticsearch instances got some love too. So why does Elasticsearch not come with any security? It does: X-Pack. And it costs money. They could have provided at least basic authentication in the free version. Elastic's reaction to this ransomware episode was to make the X-Pack training free. I expect more security-related issues in the future.

DISCLAIMER

For production systems, if there is money, go for X-Pack. Among other things, it comes with support, which is valuable for avoiding pitfalls and troubleshooting issues.

HOW

There are actually quite a few clever ways to enable authentication in an Elasticsearch cluster. The most common is to put Nginx in front of it. However, that approach depends on parsing the requests to figure out whether the actions should be allowed or blocked. A much cleaner approach is a privilege-based system, where you have users and privileges are granted to them. Depending on the privileges, you can control access to indices for different users.

So let us start. It is surprisingly simple.

1. Install Elasticsearch. At the time of writing it is at version 5.2.2.

2. Install ReadonlyREST.

Tip: If you are working on a Windows machine with antivirus software, you might get an error like this during the install:

@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@
@ WARNING: plugin requires additional permissions @
@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@
* java.lang.RuntimePermission accessDeclaredMembers
* java.lang.reflect.ReflectPermission suppressAccessChecks
* java.security.SecurityPermission getProperty.ssl.KeyManagerFactory.algorithm
* java.util.PropertyPermission * read,write
See http://docs.oracle.com/javase/8/docs/technotes/guides/security/permissions.html
for descriptions of what these permissions allow and the associated risks.
Continue with installation? [y/N]y
Exception in thread "main" java.nio.file.AccessDeniedException: C:\ELK\elasticsearch-5.2.2\plugins\.installing-7517334941090603873 -> C:\ELK\elasticsearch-5.2.2\plugins\readonlyrest
    at sun.nio.fs.WindowsException.translateToIOException(WindowsException.java:83)
    at sun.nio.fs.WindowsException.rethrowAsIOException(WindowsException.java:97)
    at sun.nio.fs.WindowsFileCopy.move(WindowsFileCopy.java:301)
    at sun.nio.fs.WindowsFileSystemProvider.move(WindowsFileSystemProvider.java:287)
    at java.nio.file.Files.move(Files.java:1395)
    at org.elasticsearch.plugins.InstallPluginCommand.install(InstallPluginCommand.java:503)
    at org.elasticsearch.plugins.InstallPluginCommand.execute(InstallPluginCommand.java:212)
    at org.elasticsearch.plugins.InstallPluginCommand.execute(InstallPluginCommand.java:195)
    at org.elasticsearch.cli.SettingCommand.execute(SettingCommand.java:54)
    at org.elasticsearch.cli.Command.mainWithoutErrorHandling(Command.java:122)
    at org.elasticsearch.cli.MultiCommand.execute(MultiCommand.java:69)
    at org.elasticsearch.cli.Command.mainWithoutErrorHandling(Command.java:122)
    at org.elasticsearch.cli.Command.main(Command.java:88)
    at org.elasticsearch.plugins.PluginCli.main(PluginCli.java:47)
    Suppressed: java.io.IOException: Could not remove the following files (in the order of attempts):
        C:\ELK\elasticsearch-5.2.2\plugins\.installing-7517334941090603873: java.nio.file.DirectoryNotEmptyException: C:\ELK\elasticsearch-5.2.2\plugins\.installing-7517334941090603873
        at org.apache.lucene.util.IOUtils.rm(IOUtils.java:323)
        at org.elasticsearch.plugins.InstallPluginCommand.install(InstallPluginCommand.java:526)
        ... 8 more
java.io.IOException: Could not remove the following files (in the order of attempts):
    C:\ELK\elasticsearch-5.2.2\plugins\.installing-7517334941090603873\guava-21.0.jar: java.nio.file.AccessDeniedException: C:\ELK\elasticsearch-5.2.2\plugins\.installing-7517334941090603873\guava-21.0.jar
    C:\ELK\elasticsearch-5.2.2\plugins\.installing-7517334941090603873: java.nio.file.DirectoryNotEmptyException: C:\ELK\elasticsearch-5.2.2\plugins\.installing-7517334941090603873
    at org.apache.lucene.util.IOUtils.rm(IOUtils.java:323)
    at org.elasticsearch.plugins.InstallPluginCommand.close(InstallPluginCommand.java:618)
    at org.apache.lucene.util.IOUtils.close(IOUtils.java:89)
    at org.elasticsearch.plugins.PluginCli.close(PluginCli.java:52)
    at org.elasticsearch.cli.Command.lambda$main$0(Command.java:65)
    at java.lang.Thread.run(Thread.java:745)

This is a bit of snake oil, but I was able to get around it by pressing y and then Enter as soon as the install process started. YMMV.

Once the install is complete, all we have to do is configure elasticsearch.yml to enable authentication in Elasticsearch. The best place to learn everything about the configuration is, of course, the documentation section of the plugin's website. Here I will solve the problem of creating a user for the express purpose of logging only.

Put this section in your elasticsearch.yml:

readonlyrest:
    enable: true
    response_if_req_forbidden: Access denied!!!

    access_control_rules:

    - name: "Accept all requests from localhost"
      type: allow
      hosts: [XXX.XX.XXX.XXX]

    - name: "::Log user::"
      auth_key_sha256: 963f4c134808b2762081228d10e13eb1a71c94d05d81ad78c453e01f859a4481
      type: allow
      actions: ["indices:admin/create","indices:data/write/index","indices:data/write/bulk","indices:data/write/bulk[s]"]
      indices: ["log-*"]

    - name: "::Kibana server::"
      auth_key_sha256: 0152d676c34067be3ad95d376ef067df4b7f10b707a2720fbba30a09c5ea90e0
      type: allow

    - name: "::Kibana user::"
      auth_key_sha256: b26b8e58e0cd42fb6c4aa26698cfd4252b9e09ddd04c4e2db8f35e874c2289d9
      type: allow
      kibana_access: rw
      indices: [".kibana*","log-*"]

Note: Be very careful with tabs, because YAML does not allow tabs for indentation. Use Notepad++ with Show Symbol turned on.

Line 1-3

This section is simple. We enable the plugin and configure a default message in case the request falls foul of our configured rules.

The real action is packed into the “access_control_rules” section.

Line 7-9

The first block allows all operations when requests originate from a given IP address. In most cases this is localhost, so that one can log into the machines running Elasticsearch and run commands there.

Line 11-15

Let us have a user loguser, and let his password be loguser123.

It is recommended practice to generate the auth_key_sha256 using a site like this. We put the string loguser:loguser123 into the generator and get the hash 963f4c134808b2762081228d10e13eb1a71c94d05d81ad78c453e01f859a4481.
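If you would rather not type passwords into a website, the same kind of hash can be computed locally. The sketch below assumes, as the paragraph above implies, that auth_key_sha256 is simply the hex-encoded SHA-256 digest of the string username:password. The function name auth_key_sha256 is my own and is not part of ReadonlyREST.

```python
import hashlib


def auth_key_sha256(username: str, password: str) -> str:
    """Return the hex SHA-256 digest of "username:password".

    Assumption: this is the value ReadonlyREST expects in auth_key_sha256.
    """
    credentials = f"{username}:{password}".encode("utf-8")
    return hashlib.sha256(credentials).hexdigest()


if __name__ == "__main__":
    # Compare the printed value with the hash pasted into elasticsearch.yml.
    print(auth_key_sha256("loguser", "loguser123"))
```

If you hash from a shell instead, make sure no trailing newline ends up in the hashed string (for example, use echo -n). A newline changes the digest completely.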

In line 14 we decide which actions this user can perform: the log user can create indices and write data into them. The last two bulk-related actions are there because most Elasticsearch clients use the bulk API to push data. Without these two explicit actions you can still send data via curl, but you cannot send data via a client like the Serilog sink for Elasticsearch.
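The following sketch shows roughly what such a client sends. A bulk request body is newline-delimited JSON: one action line followed by one document line, posted to the _bulk endpoint. The sketch only builds the body. The index name log-2017.03.10, the type name logevent and the document fields are hypothetical examples. Elasticsearch 5.x still expects a document type, which is why each action line carries a _type.

```python
import json


def build_bulk_body(index, doc_type, documents):
    """Build an NDJSON body for POST /_bulk: an action line plus a source line per document."""
    lines = []
    for doc in documents:
        # Action/metadata line: index this document into the given index and type.
        lines.append(json.dumps({"index": {"_index": index, "_type": doc_type}}))
        # Source line: the document itself, serialized on a single line.
        lines.append(json.dumps(doc))
    # The bulk API requires the body to end with a newline.
    return "\n".join(lines) + "\n"


if __name__ == "__main__":
    # Hypothetical index, type and log events, for illustration only.
    body = build_bulk_body(
        "log-2017.03.10",
        "logevent",
        [{"level": "Information", "message": "Service started"},
         {"level": "Warning", "message": "Disk almost full"}],
    )
    print(body, end="")
```

A request like this goes through the bulk write actions rather than indices:data/write/index alone. That is why the log user's action list above includes both bulk entries.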

Line 15 restricts all of the above actions to indices whose names begin with “log-”. This makes the system more robust. You do not want the user to have access to all the indices!!
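To make the combined effect of lines 11-15 concrete, here is a toy model of the log user block. A request is allowed only if three conditions hold: the credentials hash matches, the action is in the list, and the index matches one of the patterns. This is my own simplified illustration, not ReadonlyREST's implementation, which supports many more rule types. The names LOG_USER_BLOCK and block_allows are hypothetical. The hash is computed in place from loguser:loguser123 rather than copied from the config, so the sketch stands on its own.

```python
import fnmatch
import hashlib

# Hypothetical, simplified model of the "::Log user::" block; not ReadonlyREST code.
LOG_USER_BLOCK = {
    "auth_key_sha256": hashlib.sha256(b"loguser:loguser123").hexdigest(),
    "actions": {
        "indices:admin/create",
        "indices:data/write/index",
        "indices:data/write/bulk",
        "indices:data/write/bulk[s]",
    },
    "indices": ["log-*"],
}


def block_allows(block, credentials, action, index):
    """Return True only if every condition in the block matches the request."""
    # 1. Credentials: compare SHA-256 of "user:password" with the configured hash.
    if credentials is None:
        return False
    digest = hashlib.sha256(credentials.encode("utf-8")).hexdigest()
    if digest != block["auth_key_sha256"]:
        return False
    # 2. Action: compared literally, because glob matching would treat
    #    the "[s]" in "bulk[s]" as a character class.
    if action not in block["actions"]:
        return False
    # 3. Index: shell-style patterns such as "log-*", matched case-sensitively.
    return any(fnmatch.fnmatchcase(index, pattern) for pattern in block["indices"])


if __name__ == "__main__":
    creds = "loguser:loguser123"
    print(block_allows(LOG_USER_BLOCK, creds, "indices:admin/create", "log-123"))    # True
    print(block_allows(LOG_USER_BLOCK, creds, "indices:admin/create", "something"))  # False
    print(block_allows(LOG_USER_BLOCK, creds, "indices:admin/delete", "log-123"))    # False
```

Note that deleting even a log- index fails in this model, because indices:admin/delete is simply not in the list.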

Line 17-21

The remaining two blocks are about Kibana.

First we have to give full access to the Kibana daemon, which we do in the Kibana server block. We create the username and password combination kibana:kibana123 and, as usual, make a hash of it. :-)

Remember to put this in your kibana.yml too.

elasticsearch.username: "kibana"
elasticsearch.password: "kibana123"

Now we restrict access to Kibana by creating users. We name our user kibanauser, and his password will be kibanauser123. Again we generate the auth_key_sha256 b26b8e58e0cd42fb6c4aa26698cfd4252b9e09ddd04c4e2db8f35e874c2289d9 using the string kibanauser:kibanauser123.

We allow this user to read and write indices starting with .kibana, and restrict his access to indices starting with log- to read-only.

No access to any other indices.

I am using the Serilog sink for Elasticsearch to push data from my clients to the Elasticsearch instance. The sink needs a little bit of configuration.

I had to add this line to the App.config among other things.

<appSettings>
  ...
  <add key="serilog:write-to:Elasticsearch.nodeUris" value="http://loguser:loguser123@whowhywhat:9200" />
  ...
</appSettings>

Note that the username and password are written in plain text. They also go over the network as plain text. But since this user can only create indices and put data into the Elasticsearch cluster and do nothing else (he cannot even read data), it should be fine. My next post will be on how to configure SSL between the nodes.
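The following sketch shows why "plain text" is the right description. It assumes the sink turns the user info in nodeUris into a standard HTTP Basic Authorization header, which is what Elasticsearch clients generally do. That header is just Base64 of username:password, so anyone who can see the traffic can decode it. The function names are hypothetical.

```python
import base64
from urllib.parse import urlsplit


def basic_auth_header(node_uri: str) -> str:
    """Build the HTTP Basic Authorization header implied by credentials in a URI."""
    parts = urlsplit(node_uri)
    if parts.username is None:
        raise ValueError("URI carries no credentials")
    user_pass = f"{parts.username}:{parts.password or ''}"
    return "Basic " + base64.b64encode(user_pass.encode("utf-8")).decode("ascii")


def decode_basic_auth(header: str) -> str:
    """Reverse the header: Base64 is an encoding, not encryption."""
    scheme, _, encoded = header.partition(" ")
    if scheme != "Basic" or not encoded:
        raise ValueError("not a Basic Authorization header")
    return base64.b64decode(encoded).decode("utf-8")


header = basic_auth_header("http://loguser:loguser123@whowhywhat:9200")
print(header)
print(decode_basic_auth(header))  # loguser:loguser123
```

This is why limiting what loguser can do matters so much until the traffic is encrypted.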

And that is it.

Now we have configured authentication in Elasticsearch that allows a given user to create indices with certain names and push data into them. It does not even allow that user to read data, let alone wipe it clean.

Hmmm. So does this configuration of authentication in Elasticsearch really work?

OK, here are two scenarios I ran.

1. Trying to create the index log-123, which loguser is allowed to do, but forgetting to provide credentials!!

$ curl -XPUT 'http://whowhywhat:9200/log-123' -H 'Content-Type: application/json' -d' {"settings" : {"index" : {"number_of_shards" : 3, "number_of_replicas" : 2 }}}'

Access denied!!!

2. Trying to create the index “something” with credentials!! But forgetting that loguser can only create indices whose names start with log-!!!

$ curl -u loguser:loguser123 -XPUT 'http://whowhywhat:9200/something' -H 'Content-Type: application/json' -d' {"settings" : {"index" : {"number_of_shards" : 3, "number_of_replicas" : 2 }}}'

Access denied!!!
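If you prefer to script these checks, the sketch below builds the same PUT request as the curl examples using Python's standard urllib. When credentials are passed, it adds the same kind of Basic Authorization header that curl -u sends. The function name is hypothetical, and the code deliberately stops short of sending anything.

```python
import base64
import json
import urllib.request


def create_index_request(base_url, index, credentials=None):
    """Build (without sending) the PUT request used in the curl examples."""
    settings = {"settings": {"index": {"number_of_shards": 3, "number_of_replicas": 2}}}
    request = urllib.request.Request(
        f"{base_url}/{index}",
        data=json.dumps(settings).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="PUT",
    )
    if credentials is not None:
        # Same kind of header curl builds for -u user:password (HTTP Basic auth).
        token = base64.b64encode(credentials.encode("utf-8")).decode("ascii")
        request.add_header("Authorization", f"Basic {token}")
    return request


# Scenario 1: no credentials. Scenario 2: credentials, but a non-log- index.
no_auth = create_index_request("http://whowhywhat:9200", "log-123")
wrong_index = create_index_request("http://whowhywhat:9200", "something", "loguser:loguser123")
print(no_auth.get_method(), no_auth.full_url, no_auth.has_header("Authorization"))
print(wrong_index.get_method(), wrong_index.full_url, wrong_index.has_header("Authorization"))
```

Passing such a request to urllib.request.urlopen sends it. A rejected request surfaces as urllib.error.HTTPError rather than a normal response.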

I could go on, but I will stop here. Best-in-class security remains X-Pack. But if you do not have the money, configuring authentication in Elasticsearch with this plugin can be a small step towards security.

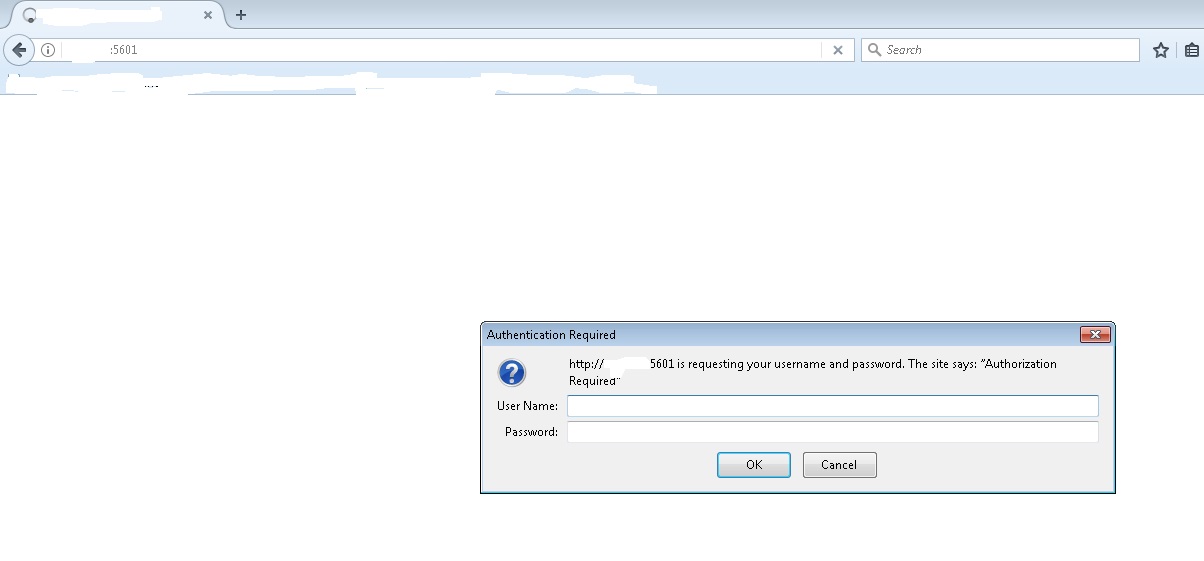

Thanks, great post! One question: does it ask you for a username and password in Kibana?

Yikes!! I missed one critical section. I have added it to the post now. Yes, it will ask for authentication, and it asks twice. This was raised in the forum before; I will try to hunt down the link.

You may also want to have a look on Search Guard https://github.com/floragunncom/search-guard

Hi, my question is related to security. I have been using Search Guard + Serilog sink + Elasticsearch + Kibana. I added username/password security inside Elasticsearch, and a popup always appears asking me for authentication. But I don’t know how I can send authorization and authentication info via the Serilog sink.

I am not entirely clear about your setup. If you access Kibana to view the data, then yes, at the time of writing it will ask for authentication. As for passing the credentials to Elasticsearch, you can do that via the Serilog settings in App.config. Your application can then send data directly to your Elasticsearch setup.

Hi Pankaj – is there a way to connect with you?

You can post here. I am usually prompt in replying.

How do I go about setting up encryption with ES so that the ES data is encrypted at rest and in transit?

Daily backup/restore functionality with ES (Curator for both?)?

On a personal note, would you be interested in helping me with an ES project (paid)? It would be good to connect on Skype/phone to discuss further.

How can I set this up with Spring Boot?

I suggest you post the question in the ReadonlyREST forum.

Hello Pankaj,

Thanks for sharing this valuable info. I tried the same, but I am stuck at the step below:

“I had to add this line to the App.config among other things.”

I can’t find App.config in the Serilog folder. Kindly let me know: do we have to create it separately and put in the piece of code you specified?

Thanks in advance

Hi Saravana,

A lot has changed since I wrote this post. Maybe I should take this one down. Security is now built into Elasticsearch and is free, and you can easily configure it. There is support for anonymous users, so legacy clients can still send data without a username and password. I think I will write a post on how to do this. Stay tuned.

Regards
